#Background

In the 1990s, when the Internet was still young, connections were slow and capacity was small. Most content on the Internet was text, so the search engines invented at that time searched text, and they worked well for it. The Internet has developed quickly over the last 10 years, however: new technology has made it faster and more capable, and people are now surrounded by visual information such as images and videos. How to index and search images is the new challenge. Some search engines, such as Google, can search by image. We were interested in how they do this, so we did some research in the field of reverse visual search.

Compared with text search, reverse visual search is much more difficult. In text search, we query with text and find sentences that contain the query keywords. But how do we search images? What should we find when the query is an image? That depends on what kind of image we are querying.

Reverse visual search needs a set of images to search: after all, we are searching images, not creating them. In this project, we do reverse visual search on the LFW dataset, a dataset of human faces. We take an image file as the input query and return results related to it; that is, we identify the person's face and find similar faces in the dataset.

#Related work

Visual search is a relatively new field of study in Artificial Intelligence, so there are not yet many related works and applications. Most visual search algorithms involve feature extraction and hashing: feature extraction extracts the features of an image, and hashing encodes those features so that the complicated visual information can be represented as a one-dimensional array.
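To make the hashing idea concrete, here is a minimal sketch of one common scheme, sign random projection, which turns a feature vector into a short list of bits. This is an illustration under our own assumptions, not the method of any particular search engine: the function names, the 16-bit code length, and the toy feature vectors are all hypothetical.

```python
import random


def make_hyperplanes(dim, n_bits, seed=0):
    """Draw n_bits random hyperplanes (Gaussian normal vectors) in dim dimensions."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def hash_features(features, hyperplanes):
    """Return one bit per hyperplane: 1 if the vector lies on its non-negative side."""
    return [
        1 if sum(w * x for w, x in zip(plane, features)) >= 0 else 0
        for plane in hyperplanes
    ]


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


# Toy 4-dimensional "features" (hypothetical values, not real image features).
planes = make_hyperplanes(dim=4, n_bits=16)
code_a = hash_features([0.9, 0.1, 0.3, 0.5], planes)
code_b = hash_features([1.8, 0.2, 0.6, 1.0], planes)      # same direction, scaled
code_c = hash_features([-0.9, -0.1, -0.3, -0.5], planes)  # opposite direction
```

Vectors that point in similar directions tend to share most bits, so the Hamming distance between codes can serve as a cheap stand-in for the distance between the original features.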

Several important applications of visual search:

- Google image search

- Bing image search

- TinEye

- Pixsy

#Dataset

Since we are searching images rather than creating them, we need a dataset.

Labeled Faces in the Wild (LFW) is a benchmark dataset for face verification. It contains 13,233 images of 5,749 people, 1,680 of whom have two or more images. We do reverse visual search on this dataset.
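As a quick sanity check on these numbers, the sketch below counts images per person. It assumes the usual LFW layout of one sub-directory per person containing that person's `.jpg` files; the function names are ours, and the dataset root is whatever path the archive was extracted to.

```python
from collections import Counter
from pathlib import Path


def count_images_per_person(root):
    """Count .jpg files per person, assuming one sub-directory per person."""
    counts = Counter()
    for path in Path(root).glob("*/*.jpg"):
        counts[path.parent.name] += 1
    return counts


def people_with_multiple_images(counts, minimum=2):
    """Names of people who have at least `minimum` images, sorted alphabetically."""
    return sorted(name for name, n in counts.items() if n >= minimum)
```

Calling `count_images_per_person` on the extracted dataset directory and passing the result to `people_with_multiple_images` gives the people for whom a query can, in principle, retrieve another photo of the same person.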

Reverse visual search on a face dataset resembles face recognition: we are given a set of images of different people's faces as candidates, and we need to find faces similar to a given target face. If the person in the target image has other images in the dataset, we need to find them; if not, we can return similar faces.

We tested several neural networks for embedding extraction, including VGG16, MobileNet, InceptionV3, ResNet50, and Xception, and found that ResNet50 was better than the others in both efficiency and accuracy.
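One way such a comparison could be scored is precision at k: the fraction of the top k results that show the same person as the query. The sketch below is an assumption about how accuracy might be measured, not a description of the exact metric used here; the function names and labels are hypothetical.

```python
def precision_at_k(query_label, retrieved_labels, k):
    """Fraction of the top-k retrieved labels that match the query label."""
    top = retrieved_labels[:k]
    if not top:
        return 0.0
    return sum(label == query_label for label in top) / len(top)


def mean_precision_at_k(results, k):
    """Average precision@k over (query_label, retrieved_labels) pairs."""
    scores = [precision_at_k(query, retrieved, k) for query, retrieved in results]
    return sum(scores) / len(scores) if scores else 0.0
```

Running the same set of queries through each candidate network and comparing `mean_precision_at_k`, alongside the time taken to extract embeddings, gives one number for accuracy and one for efficiency per network.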

#First approach

In this approach, we replicate a simple, easy-to-understand method with two parts: the first builds a KNN index, and the second searches it with a query image.

#Create KNN reference index

- Take a pre-trained neural network and remove its last layer.

- Feed the images into the neural network. Since the last layer is removed, the output of the network is the image features (embeddings).

- Build a KNN index from these embeddings.
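The steps above can be sketched with a toy network. The two-layer network, its weights, and the three-value "images" below are all made up for illustration; in practice a pre-trained network such as ResNet50 would take their place. The point is only to show what "removing the last layer" means: the forward pass stops before the classification layer and returns the previous layer's activations as the embedding.

```python
def relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in values]


def dense(values, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, values)) + b
            for row, b in zip(weights, bias)]


def forward(values, layers, drop_last=False):
    """Run the network; with drop_last=True, stop before the final layer."""
    last = len(layers) - 1
    for i, (weights, bias) in enumerate(layers):
        if i == last:
            if drop_last:
                break                           # skip the classification layer
            return dense(values, weights, bias)  # class scores
        values = relu(dense(values, weights, bias))
    return values                               # embedding from the last hidden layer


# Hypothetical toy network: 3 inputs -> 2 hidden units -> 2 class scores.
layers = [
    ([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
]

# Hypothetical "images", already flattened to three values each.
images = {"a.jpg": [1.0, 2.0, 0.0], "b.jpg": [0.0, 2.0, 4.0]}

# The reference index maps each image name to its embedding.
index = {name: forward(pixels, layers, drop_last=True)
         for name, pixels in images.items()}
```

Storing the embeddings by image name is enough for a brute-force KNN search; a dedicated nearest-neighbor structure only becomes necessary when the dataset is too large to scan.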

#Query image

- Feed the query image into the same neural network and extract its embedding.

- Compare the query embedding with all the embeddings in the dataset and find the most similar images using KNN.
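A brute-force version of the query step might look like the sketch below. It assumes cosine similarity as the comparison measure, which is our choice for the example rather than something fixed by the method, and it uses made-up two-dimensional embeddings and image names.

```python
import heapq
import math


def normalize(vector):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def top_k(query, index, k=3):
    """Return the k (name, similarity) pairs most similar to the query embedding."""
    scored = ((cosine_similarity(query, emb), name) for name, emb in index.items())
    return [(name, score) for score, name in heapq.nlargest(k, scored)]


# Hypothetical reference embeddings: two images of person 1, one of person 2.
index = {"p1_a": [1.0, 0.0], "p1_b": [0.9, 0.1], "p2_a": [0.0, 1.0]}
matches = top_k([1.0, 0.05], index, k=2)
```

Here `matches` holds the two images of person 1, since their embeddings point in nearly the same direction as the query.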

#Results

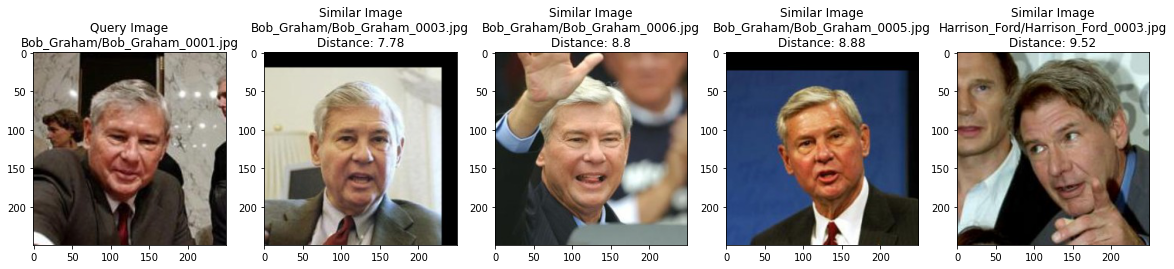

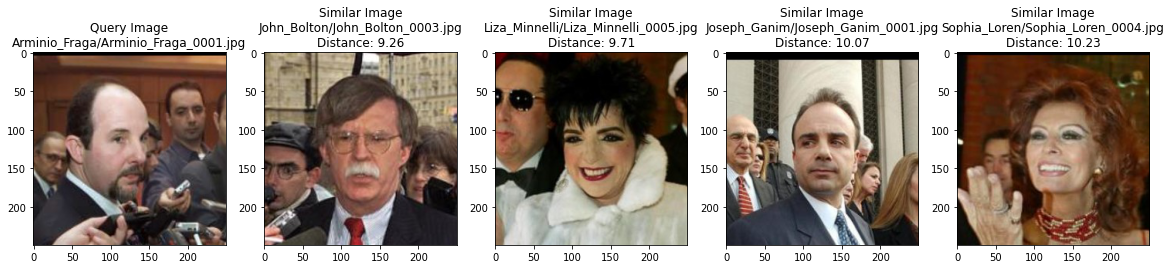

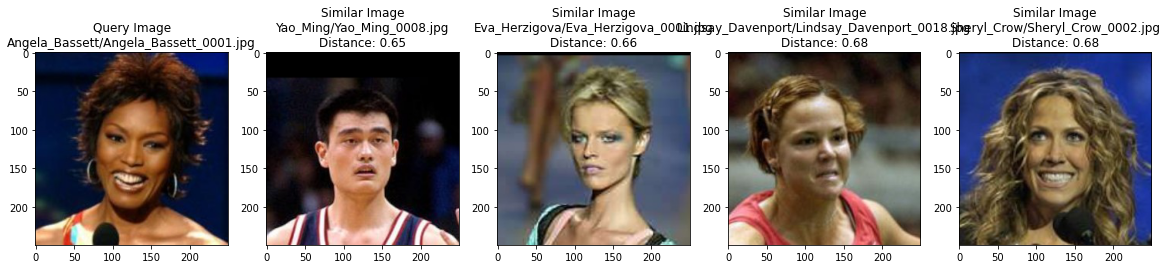

The images above are results obtained with this approach.

#Improvement

The accuracy of the above method is lower than expected, so we looked for ways to improve it. Revisiting the approach, we realized that the main reason for the low accuracy is that we use the whole image for the search, even though we are only interested in part of it.

So, we first crop the region of interest (ROI), then extract features from the ROI only.

For reverse visual search on human faces, we are interested in the face, not the background. Where the person stands does not matter: we do not care whether they are sitting in a room or lying on a beach, or whether they are wearing a red T-shirt or a black suit. So, we detect the face and then use only the face region.

Luckily, there are already well-developed methods for doing this. We chose FaceNet to detect the face and then crop the facial region.
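Once a detector has returned a bounding box, the crop itself is simple. The sketch below assumes the image is a list of pixel rows and the box is `(top, left, bottom, right)` with exclusive bottom and right edges; this box format, the optional margin, and the function name are our assumptions, and the detector is left out entirely.

```python
def crop_roi(image, box, margin=0):
    """Crop a (top, left, bottom, right) box, expanded by margin and clamped to the image."""
    height = len(image)
    width = len(image[0]) if image else 0
    top, left, bottom, right = box
    top = max(0, top - margin)
    left = max(0, left - margin)
    bottom = min(height, bottom + margin)
    right = min(width, right + margin)
    return [row[left:right] for row in image[top:bottom]]


# Toy 4x5 "image" whose pixel values encode their own row and column.
image = [[row * 10 + col for col in range(5)] for row in range(4)]
face = crop_roi(image, (1, 1, 3, 3))
```

Clamping matters because a margin around a face near the image border would otherwise produce negative or out-of-range indices.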

Now, the steps for building the reference index are:

- Detect the ROI

- Crop the ROI

- Extract features from the ROI

- Build the KNN index from these features (the embeddings)

And the steps for querying with an image are:

- Detect the ROI

- Crop the ROI

- Extract features from the ROI

- Compare the query embedding with all the embeddings in the dataset and find the most similar images using KNN
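Both lists of steps share the same detect, crop, and extract stages, so they can be sketched as one small pipeline. In the sketch below, `detect` and `embed` are pluggable callables standing in for the face detector and the neural network; the toy versions, the choice to skip images with no detected face, and the use of Euclidean distance are all assumptions made for illustration.

```python
import math


def roi_embedding(image, detect, embed):
    """Detect the ROI, crop it, and embed it; return None if nothing is detected."""
    box = detect(image)
    if box is None:
        return None
    top, left, bottom, right = box
    roi = [row[left:right] for row in image[top:bottom]]
    return embed(roi)


def build_index(images, detect, embed):
    """Map image names to ROI embeddings, skipping images with no detection."""
    index = {}
    for name, image in images.items():
        embedding = roi_embedding(image, detect, embed)
        if embedding is not None:
            index[name] = embedding
    return index


def search(query_image, index, detect, embed, k=3):
    """Return the names of the k indexed images closest to the query ROI."""
    query = roi_embedding(query_image, detect, embed)
    if query is None:
        return []
    ranked = sorted(index, key=lambda name: math.dist(query, index[name]))
    return ranked[:k]


def toy_detect(image):
    """Toy stand-in: pretend the face is always the top-left 2x2 patch."""
    if len(image) >= 2 and len(image[0]) >= 2:
        return (0, 0, 2, 2)
    return None


def toy_embed(roi):
    """Toy stand-in: use the mean of each row as the embedding."""
    return [sum(row) / len(row) for row in roi]


images = {
    "a": [[1, 1, 9], [1, 1, 9], [9, 9, 9]],
    "b": [[5, 5, 0], [5, 5, 0], [0, 0, 0]],
    "tiny": [[1]],  # too small for the toy detector, so it is skipped
}
index = build_index(images, toy_detect, toy_embed)
result = search([[2, 2, 7], [2, 2, 7], [7, 7, 7]], index, toy_detect, toy_embed, k=1)
```

The query matches image `a` even though the values outside the 2x2 patch differ, which is the effect the ROI crop is meant to achieve: the background no longer influences the comparison.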

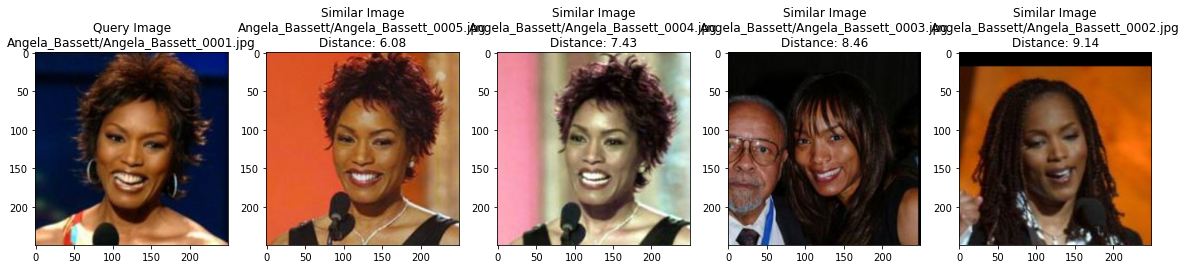

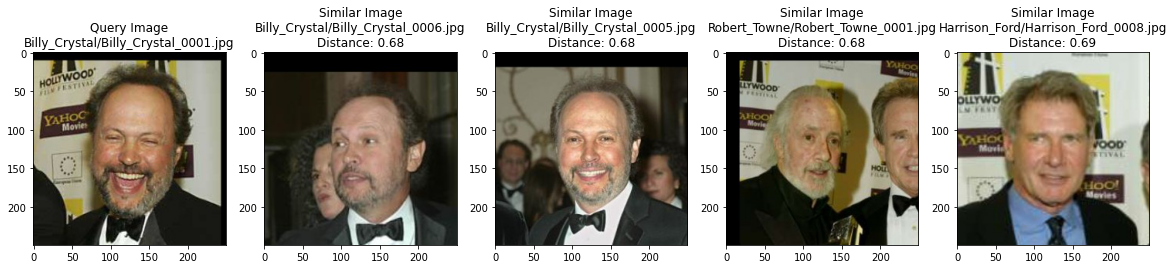

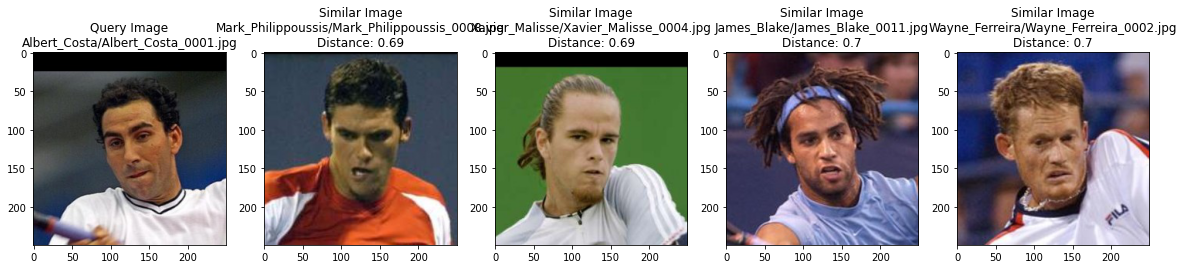

#Result